AI's Growing Dependence on Clean and Anonymous Web Data

Max T

Mar 5, 2024


Artificial intelligence is only as good as the data behind it, especially for customer support chatbots.

If your bot is trained on scattered, outdated, or irrelevant content, it’ll frustrate customers, not help them. But when it’s powered by clean, structured information from your own business — your help docs, product pages, and previous support chats — it becomes a real extension of your team.

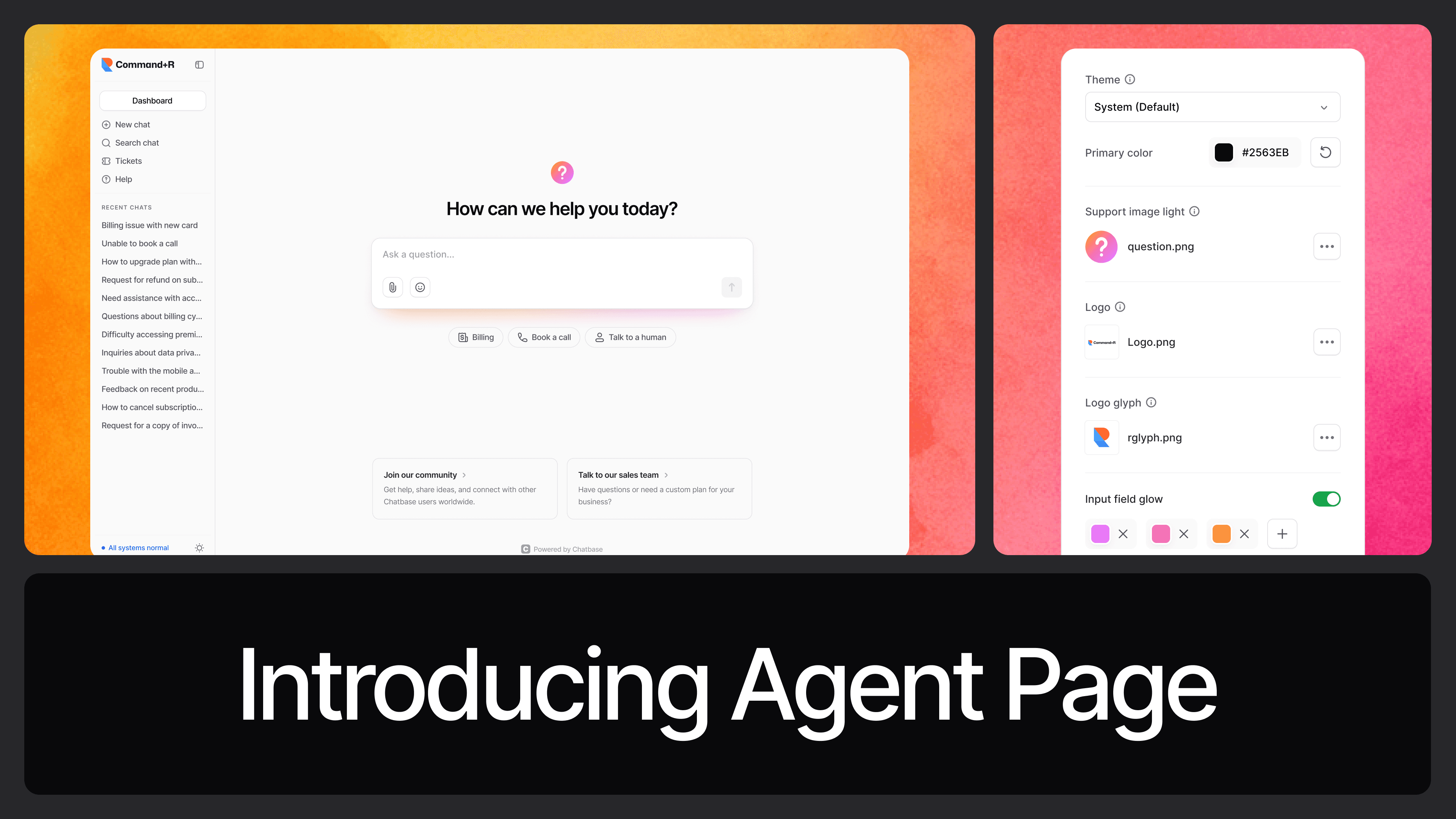

That’s the Chatbase approach. Instead of scraping anonymous data or stitching together random sources, Chatbase gives you a system to train AI chatbots using the exact information your customers already rely on: nothing more, nothing less.

Why Clean Data Is Critical to AI Accuracy

Dirty data, meaning data that is biased, duplicated, irrelevant, or incomplete, can seriously hinder AI performance. Systems trained on poor data may produce inaccurate results, make unethical decisions, or behave unpredictably. These consequences can affect real-world outcomes, such as product recommendations, hiring processes, or medical diagnoses.

In contrast, clean data helps AI models function as intended. It helps algorithms reflect a balanced perspective, perform better across different scenarios, and pass validation tests more reliably. Reaching this level of data quality requires a well-structured collection strategy, and that’s where proxies come in.

Chatbots are only as helpful as the information they’ve been trained on. If the data behind the scenes is messy (outdated FAQs, conflicting product descriptions, or incomplete guides), the bot ends up giving wrong or inconsistent answers. And in support, that’s a dealbreaker.

Clean data isn’t just a nice-to-have; it’s what separates bots that solve problems from bots that escalate tickets.

With Chatbase, you decide exactly what the bot learns from. You upload your website content, documentation, help articles, whatever makes sense, and the chatbot sticks to it. That means fewer hallucinations, fewer frustrated customers, and a lot more confidence in automation.

The Value of Anonymous Data Collection

Many AI models rely on web data, such as consumer reviews, social media trends, news articles, and e-commerce listings. However, gathering this data at scale poses challenges. Without anonymization, IP addresses used for scraping are quickly flagged, throttled, or blocked. Websites employ anti-bot technology to prevent large-scale data collection.

Using anonymous proxies, AI development teams can mask their identity and avoid detection. This enables more stable and continuous data scraping and protects the privacy of both the scraper and the data sources. Anonymous collection reduces the likelihood of triggering defenses or violating privacy regulations.

Empower AI Data Collection

To meet the enormous data demands of modern AI, developers rely on proxy solutions that offer speed, reliability, and access to content globally. Whether scraping thousands of product listings or analyzing sentiment across multiple languages, proxies enable continuous access to the web at scale.

Choosing a proxy setup designed for high-traffic, high-demand workloads is crucial. Features such as rotating IP addresses, dedicated bandwidth, and robust session management help with large collection tasks that need quick responses, which matters when training AI systems that consume large volumes of data.
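To make IP rotation concrete, here is a minimal Python sketch of round-robin rotation over a proxy pool. The proxy URLs and the `proxy_rotation` helper are hypothetical placeholders, not part of any specific provider's API; the only real convention assumed is the `{"http": ..., "https": ...}` mapping that the `requests` library accepts in its `proxies` argument. No network call is made here.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with the addresses your provider issues.
PROXY_URLS = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
    "http://proxy-c.example.com:8080",
]


def proxy_rotation(proxy_urls):
    """Return an endless iterator of proxy mappings in round-robin order."""
    if not proxy_urls:
        raise ValueError("at least one proxy URL is required")
    # Each item has the mapping shape accepted by requests' `proxies` argument.
    return ({"http": url, "https": url} for url in cycle(proxy_urls))


rotation = proxy_rotation(PROXY_URLS)

# Take four consecutive proxies: the fourth wraps around to the first endpoint.
first_four = [next(rotation)["http"] for _ in range(4)]
print(first_four)
```

In a real collector, each outgoing request would take `next(rotation)` and pass it along, for example as `requests.get(url, proxies=next(rotation), timeout=10)`. Round-robin is only one possible policy; a provider may rotate addresses on its side instead.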

1. Supporting Diverse Data Needs Across Geographies

Global AI deployment requires global training data. A model that performs well in one region may underperform in another due to cultural, linguistic, or behavioral differences. For AI to be truly useful across markets, it must gather diverse and region-specific datasets during development.

Proxies with geo-targeting capabilities allow teams to simulate traffic from multiple countries, enabling access to localized content. This diversity in data strengthens the model’s versatility and minimizes bias tied to a single geography.

2. Preventing Data Contamination

AI models are vulnerable to data pollution when exposed to duplicated, misleading, or irrelevant content. These issues can confuse the learning algorithm and degrade performance over time.

Using premium proxy solutions allows developers to implement smarter filtering mechanisms during collection. Proxies can help avoid honeypot traps, reduce duplicate scraping, and maintain clean extraction pipelines. This preemptive filtering significantly lowers the need for manual data cleanup after collection, saving time, resources, and labor.
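As an illustration of the filtering idea, the sketch below removes duplicate and near-empty pages from a batch of collected text. It is an assumption about what such a step could look like, not a feature of any proxy product: the `normalize` and `deduplicate` helpers, the `min_chars` threshold, and the sample pages are all hypothetical, and the step runs on the collected text regardless of how it was fetched.

```python
import hashlib
import re


def normalize(text):
    """Collapse whitespace and lowercase so trivial formatting differences do not hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents, min_chars=20):
    """Keep the first copy of each document, dropping duplicates and very short fragments."""
    seen = set()
    kept = []
    for doc in documents:
        cleaned = normalize(doc)
        if len(cleaned) < min_chars:
            continue  # too short to be useful training content (assumed threshold)
        digest = hashlib.sha256(cleaned.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # same content already kept
        seen.add(digest)
        kept.append(doc)
    return kept


# Hypothetical sample pages for illustration only.
pages = [
    "Our return policy allows refunds within 30 days.",
    "our return policy   allows refunds within 30 days.",
    "Shipping",
    "Standard shipping takes 3 to 5 business days.",
]
clean_pages = deduplicate(pages)
print(clean_pages)
```

Here the second page is dropped as a duplicate of the first once case and spacing are normalized, and the one-word fragment is dropped as too short. Exact hashing only catches identical content after normalization; paraphrased duplicates would need a fuzzier comparison.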

3. Compliance and Ethical Collection

As data privacy regulations continue to tighten around the globe, companies must be increasingly mindful of how they source their training data. Legal frameworks now govern how information is accessed, stored, and utilized. Proxy services play a critical role by enabling compliant data acquisition.

By masking origin IPs and employing secure, encrypted channels, proxies help developers meet legal requirements without compromising data quality. For teams building a sustainable and responsible AI system, it also matters that reputable proxy providers source their IP pools ethically.

4. Building Resilient AI Through Smarter Infrastructure

AI’s accuracy, fairness, and usability depend primarily on the strength of the data pipeline. Investing in smart infrastructure like anonymous and rotating proxies isn’t a luxury — it’s a necessity. These tools ensure that teams can gather data efficiently, at scale, and without triggering defenses that might halt the process.

Proxy technologies and architecture allow for heavy-duty tasks, delivering a stable solution for teams that need massive, clean, and secure data inputs. Whether your project focuses on natural language processing, machine learning, or behavioral prediction, a robust proxy backbone can significantly impact the success or failure of your AI system.

Data Fuels AI

AI’s future hinges on the availability of rich, clean, and varied data. As competition intensifies and models become more complex, gathering this data securely and ethically becomes paramount.

By using advanced options like Nebula proxy, developers can collect the anonymous and unbiased data their AI systems need without running afoul of restrictions or compromising user privacy. Smarter data collection isn’t optional in artificial intelligence; it’s foundational. And proxies are at the heart of making it possible.

Why Clean Input = Better Support Outcomes

Clean data doesn’t just make your AI smarter — it improves the entire experience.

When your bot has the right inputs, it doesn’t just answer more accurately. It solves problems faster. It frees up your team to focus on complex issues instead of repetitive ones.

With Chatbase, clean input means:

- No hallucinated answers

- No contradictory replies

- No off-brand language

Just fast, confident, and reliable responses based on the content you’ve approved. And when your business evolves, you simply update the data — no re-coding or rebuilding needed.

→ Use your own data to build a chatbot
